AlphaZen is a CNN-based generative AI.

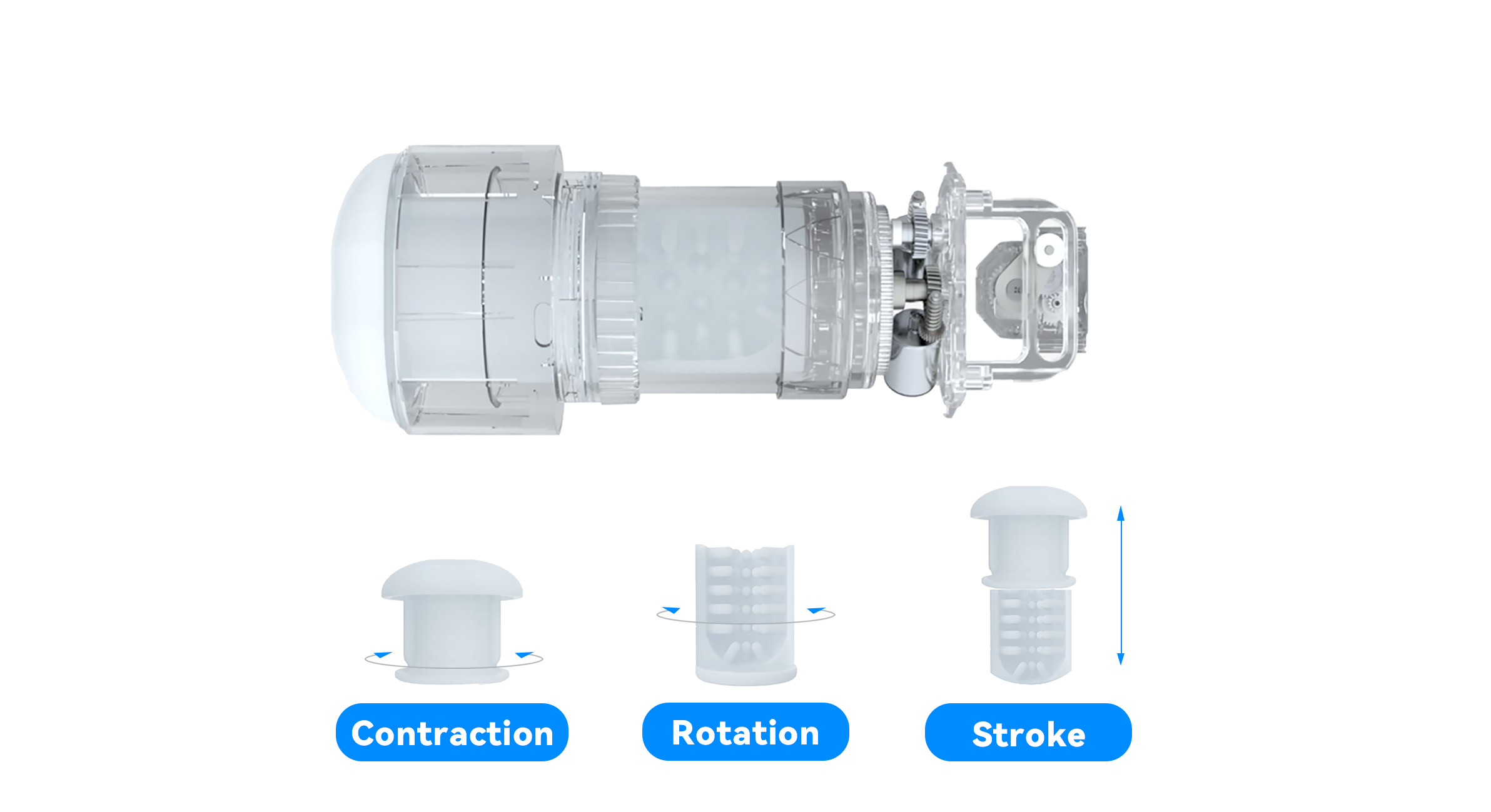

Technically speaking, Syncbot is a drone without propellers. It is a specialized drone designed not for shooting video in flight but for massaging the user's penis in sync with the video being watched. It lets you feel what the actor feels in the video playing in SyncPlayer.

Because Syncbot is specialized, its hardware and software differ from those of regular drones and ordinary robots. Our software includes a video player (SyncPlayer), obviously, but the more profound difference is that SyncPlayer contains an innovative cascade of neural networks - AlphaZen.

While Syncbot shares the basic PID foundation of ordinary drones, the origin of its software is entirely different. The core of a drone's software is an adaptive flight control system such as PPZ, APM, CC3D or NAZA. The core of Syncbot's software is AlphaZen - an NVP (natural vision processing) AI system based on neural networks.
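To make the shared PID foundation concrete, here is a minimal sketch of a PID loop that drives a position toward a target. It is an illustration only, not Syncbot's firmware: the gains, the time step and the simple "position changes by the commanded velocity" model are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook PID controller (gains are hypothetical, not Syncbot's)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        self.integral += error * dt                   # I term accumulates error
        derivative = (error - self.prev_error) / dt   # D term reacts to change
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(targets, dt=0.01):
    """Track a sequence of target positions with an assumed integrator plant."""
    pid = PID(kp=8.0, ki=2.0, kd=0.1)
    position = 0.0
    trace = []
    for target in targets:
        velocity = pid.step(target, position, dt)  # controller output
        position += velocity * dt                  # assumed plant model
        trace.append(position)
    return trace


if __name__ == "__main__":
    trace = simulate([1.0] * 2000)
    print(f"final position: {trace[-1]:.3f}")
```

In a flight controller the targets come from the pilot or a flight plan. In a system like the one described here, they would come from the vision pipeline instead, which is where the two kinds of software diverge.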

Since Syncbot is designed to serve people in general, whatever their s*x or gender, their preferences, or their social, economic or political status, the AI has to be versatile.

AlphaZen should be able to process arbitrary video content on a wide range of PCs and handle at least 90% of it correctly: self-made s*x tapes, professional books and periodicals, JAV, Ebony, interracial, gay or straight content, in mp4, wmv or RMVB format, accelerated by hardware such as an RTX 2080 Ti, RTX 3090, i7 or i9. The only thing all of these have in common is that they are visual. That is why natural vision is the key to letting AlphaZen understand what is going on in a scene.

When we began research on AlphaZen five years ago, it was like exploring uncharted waters.

Our requirements included many sub-functions that had not been developed yet. Some of these fields had not been touched by the mainstream academic community or the tech giants at all.

Previous studies have done a great deal of work on identifying human body parts (mainly faces) and limbs. Building on them, tech companies can help people look more attractive in photos or detect criminal behavior in surveillance footage. However, almost no AI research organization focuses on specific kinds of scenes. For example, an actor's actual motion can vary dramatically even within a 10-second close-up. In such scenes, each penetration may be slightly or noticeably deeper or shallower than the last. Sometimes both subjects are moving; sometimes the penis moves while the vagina remains still, or vice versa. If Syncbot is to reproduce the depth shown in the scene, AlphaZen has to trigger its sub-module for "depth estimation of irregular piston movements" and, at the same time, avoid being thrown off by zooming in or out.

AI depth estimation of irregular piston movements in a visual scene is a research gap. Previous algorithms can recognize one thing on top of another and tell them apart, such as a nose on a face. Estimating the depth of piston movements requires a new method that can comprehend one thing going into another. This breaks down into several sub-modules, and the computer has to be taught several concepts separately:

- What is a ***** visually on screen?

- What is the ***** or **** visually on screen?

- What is an exposed part of a whole thing?

- What is a hidden (occluded) part of a whole thing?

- How can the length or depth of the hidden part be estimated from previous frames?
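The last question, together with the need to ignore zoom, can be illustrated with a simple geometric heuristic. This is a sketch under stated assumptions and not AlphaZen's method, which is a trained neural network: it assumes an upstream detector (hypothetical) already reports, for each frame, the visible length of the object in pixels and the apparent size of some rigid reference in the same frame. Dividing one by the other cancels zoom, and the longest normalized length seen so far stands in for the object's full length.

```python
def estimate_hidden_fraction(visible_lengths, reference_lengths):
    """Estimate, per frame, what fraction of an object is hidden.

    visible_lengths   -- visible length of the object in pixels (assumed input)
    reference_lengths -- apparent size in pixels of a rigid reference in the
                         same frame (assumed input, used to cancel zoom)
    Returns a list of fractions in [0, 1], one per frame.
    """
    full_length = 0.0  # longest zoom-normalized length seen in earlier frames
    fractions = []
    for visible, reference in zip(visible_lengths, reference_lengths):
        if reference <= 0:
            raise ValueError("reference length must be positive")
        normalized = visible / reference      # invariant to zoom in/out
        full_length = max(full_length, normalized)
        if full_length == 0:
            fractions.append(0.0)             # nothing observed yet
        else:
            fractions.append((full_length - normalized) / full_length)
    return fractions


if __name__ == "__main__":
    # Frame 3 is zoomed in 2x: both lengths double, so the estimate is unchanged.
    print(estimate_hidden_fraction([100, 50, 200, 25], [50, 50, 100, 50]))
```

The heuristic only knows the full length once the object has been seen fully exposed, which is one reason a learned model that draws on many previous frames is the more practical choice.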

After that, a typology of scenes also needs to be built into the cascade network, covering s*xual positions, skin colors, orgies, etc. When it comes to ********************, AlphaZen also has a module that analyzes the tongue, the lips and the surface of the face to identify sucking or other behavior.

However, you will also find many scenes in which the key segments cannot be observed directly, and these alternate with regular ones. When the key segments are not clearly visible, our NVP relies mainly on two sub-modules. One focuses on the positional movement of the estimated skeleton structure. The other is a comprehensive atmosphere perception system we call CAP. CAP is always trying to figure out the level of pleasure the director wants to show. Interesting? CAP not only observes the facial expressions and listens to the moaning of the people on screen, but also takes other factors into account, such as changes in camera position, shooting angle, or even language. Since humans do not fully understand the details of how the AI does its work, we are not 100% sure how we should improve this part in the future.

To put all of this into practice we need not only researchers but also hundreds of AI annotators to tag metadata so that the neural networks can be trained. The job is simple but not easy; it is simply hard. With our limited budget, it was not possible to contract this work out in Hong Kong because of the high cost. We had to organize it across borders in developing areas (including the Laos part of the Golden Triangle). Over the years, we kept looking for outsourced annotation suppliers and selecting the dependable ones. We helped them raise an army of annotators. After years of work and tons of expenses, we wound up almost bankrupt, but we finally brought AlphaZen to a practical level. You can now pick any video, sync it and feel it easily. To be honest, I think our AI is one of the best in the world. But our annotation coordination team is the very best in the world.

According to our existing data, as of September 2022 AlphaZen had been studying videos non-stop for a cumulative total of about 3.82 million hours! The portable hard drives holding the videos, the annotation data and the machine-learning data have already filled two rooms.

CAP, NVP and AlphaZen are all ongoing concepts. As AI research in computer science keeps marching forward, we keep following at our own small pace. AlphaZen today still has its limits and drawbacks. For example, it might:

- not perform as well on *** videos as on straight videos;

- not perform as well on **** close-ups as on most *** scenes;

- occasionally miscalculate (in rare cases).

But we will keep moving forward. AlphaZen will get better week by week, and we expect it to make a leap in the next version.

We would like to express our gratitude to everyone who has contributed to the AI domain. Whether you are an annotator from India, a PhD from HK or a donor from the US, it is your contribution that makes AI, this new domain, a better branch of learning and a better innovative industry. We know we could not have achieved anything without the foundation and inspiration of all the previous work of the AI cause as a whole. With your help, with Valor & Toughness, may AI help humanity more and more, and may technology liberate individuals.
